Running Llama 3.1 Using Ollama

In this blog post, we will continue from our previous setup and run the Llama 3.1 AI model using Ollama. We will execute the model within the Ollama container and interact with it via the command line and later via the API.

Start the Llama 3.1 Model

Assuming you already have Ollama running in a Docker container as described in the previous post, the next step is to start the Llama 3.1 model.

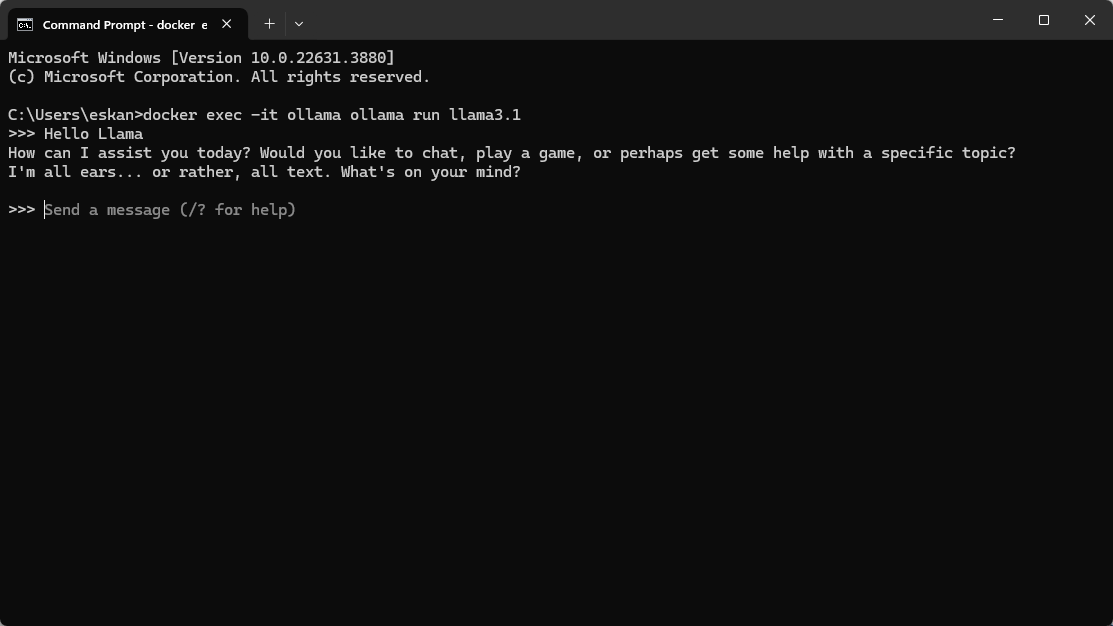

- Execute the Llama 3.1 Model: Open a terminal and run the command below to start the model within the Ollama container.

On first run, this command downloads the Llama 3.1 model into the Ollama container and then initializes it, making it ready for interaction.

docker exec -it ollama ollama run llama3.1

Interact with Llama 3.1 Using the CMD

After running the previous command, you can immediately start interacting with the AI model.

Pretty cool, isn't it? Once the model has been downloaded, there's no internet connection required and no cloud bill from Google or AWS. Just your PC doing all the work for you!
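The interactive session can also be scripted. The sketch below is a minimal example, not a definitive implementation. It assumes the container is named ollama, as in the command above, and that `ollama run` accepts the prompt as a trailing argument for a one-shot reply. The helper names `build_run_command` and `ask_once` are hypothetical and exist only for illustration.

```python
import subprocess


def build_run_command(prompt, model="llama3.1", container="ollama"):
    """Build the docker exec argument list for a one-shot prompt.

    The -it flags are omitted because no interactive terminal is needed.
    The container name "ollama" is an assumption taken from the command above.
    """
    return ["docker", "exec", container, "ollama", "run", model, prompt]


def ask_once(prompt):
    """Run the command and return the reply as text (requires Docker to be running)."""
    result = subprocess.run(
        build_run_command(prompt),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,  # raise CalledProcessError if docker exits with an error
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print(ask_once("Hello, what is your name?"))
```

Passing the arguments as a list avoids shell quoting problems when the prompt contains spaces or quotation marks.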

Interact with Llama 3.1 Using the API

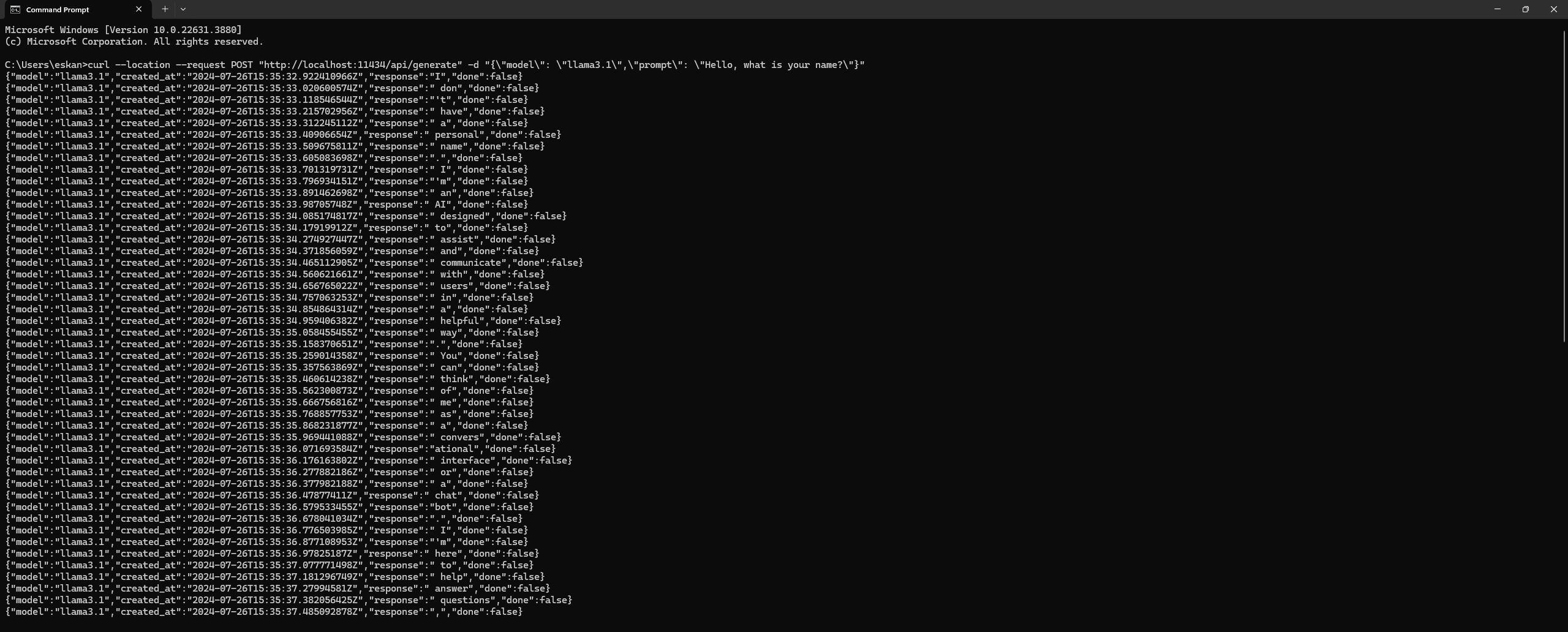

Another way to interact with the model is through Ollama's API. We'll use curl to send a request to the model.

- Send a Request Using curl: Use the following command to interact with the Llama 3.1 model:

curl --location --request POST "http://localhost:11434/api/generate" \
  -d "{\"model\": \"llama3.1\",\"prompt\": \"Hello, what is your name?\"}"

This command sends a POST request to the API endpoint with a prompt for the Llama 3.1 model to respond to. The model processes the prompt and returns a response, which appears in your terminal. By default, the reply is streamed as a series of JSON objects, each carrying a fragment of the generated text in its response field.
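The same request can be sent from a script. Here is a minimal Python sketch using only the standard library. It assumes Ollama's default streaming behavior: one JSON object per line, each with a `response` fragment, and a final object marked `"done": true`. The function names `build_request`, `join_stream`, and `generate` are hypothetical helpers, not part of Ollama.

```python
import json
import urllib.request

# Same endpoint as the curl command above.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.1"):
    """Create the same POST request that the curl command sends."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def join_stream(lines):
    """Concatenate the "response" fragments from a stream of JSON lines."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines between objects
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object is marked with "done": true
    return "".join(parts)


def generate(prompt):
    """Send the prompt and return the full reply (requires Ollama on port 11434)."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return join_stream(resp)  # the response object yields one line at a time


if __name__ == "__main__":
    print(generate("Hello, what is your name?"))
```

Separating `join_stream` from the network call lets the parsing logic be checked against sample lines without a running model.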

For more advanced ways of interacting with the Llama 3.1 model, refer to the full Ollama API documentation, which describes the available endpoints, request formats, and example use cases.

You can access the full API documentation here.
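As one example of a request option, the generate endpoint accepts a `stream` field, and setting it to false asks for the whole reply in a single JSON object instead of a stream. The following is a hedged sketch under that assumption. The helper names `build_payload` and `generate_once` are hypothetical.

```python
import json
import urllib.request


def build_payload(prompt, model="llama3.1"):
    """Request body with streaming disabled, so one JSON object comes back."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate_once(prompt, url="http://localhost:11434/api/generate"):
    """Send the prompt and return the reply text (requires Ollama to be running)."""
    req = urllib.request.Request(
        url,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        # With "stream": false the body is a single JSON document.
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    print(generate_once("Hello, what is your name?"))
```

A single response is simpler to handle in scripts, while streaming is better suited to showing text as it is generated.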

Conclusion

In this blog post, we demonstrated how to run the Llama 3.1 AI model using Ollama on a Windows machine. By executing the model within the Ollama container and interacting with it through the command line and the API, you can use Llama 3.1 locally for various AI tasks. Explore the API documentation to see what else it can do.